Overview

Guidelines for adapting the Task Flow

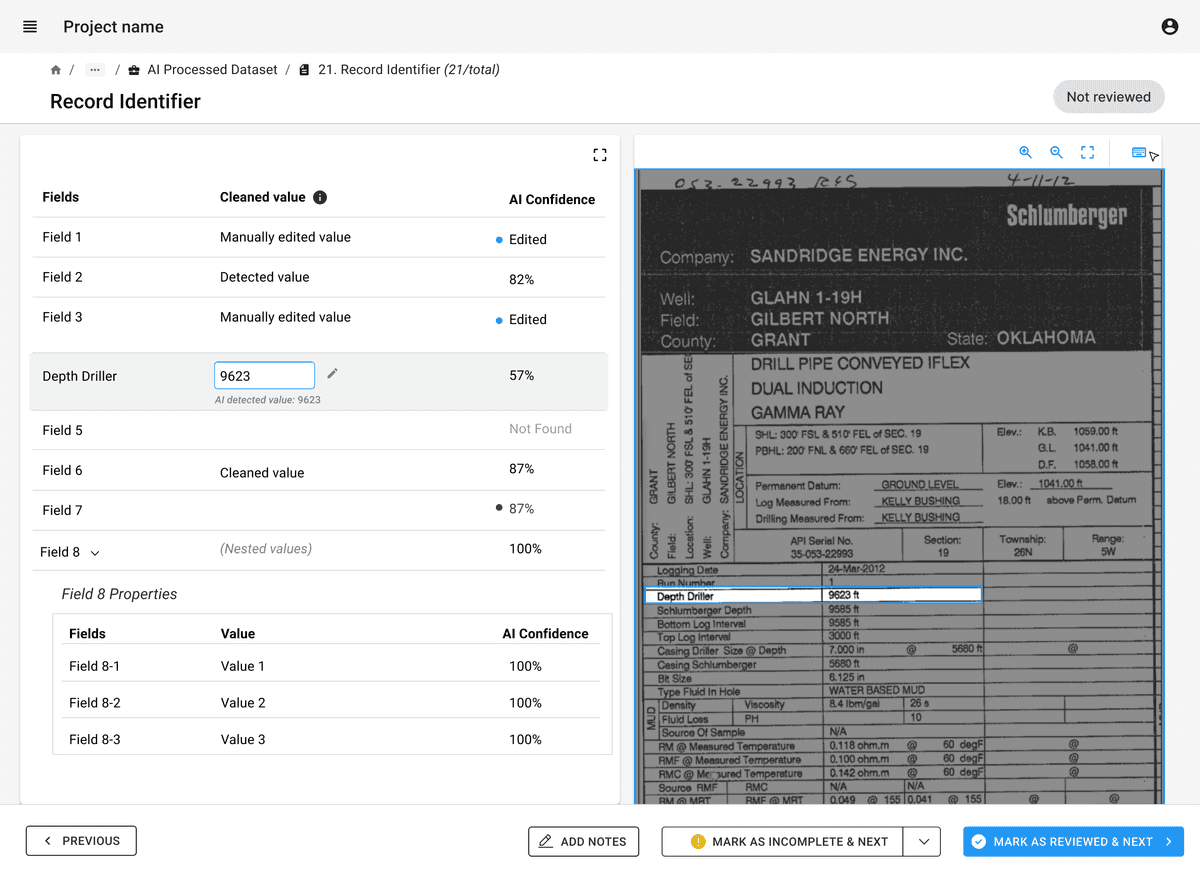

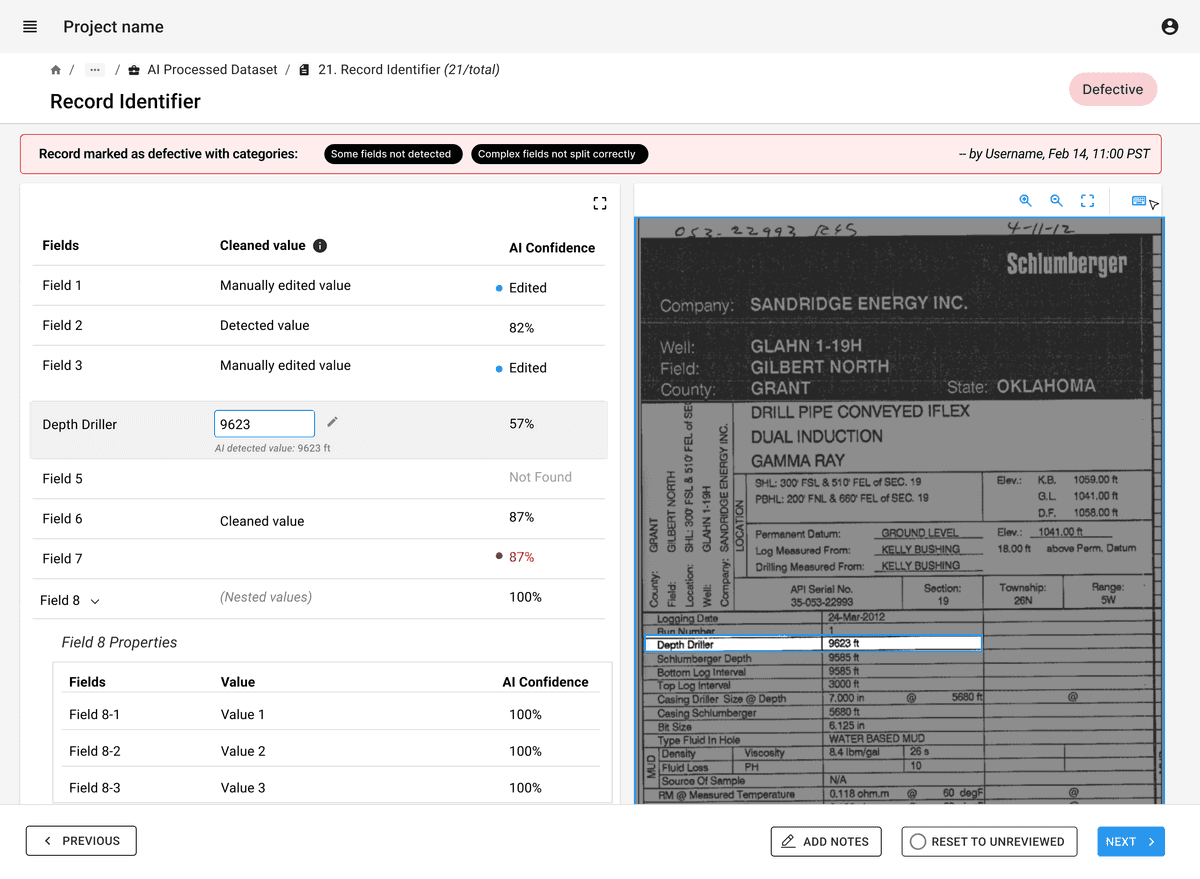

Showing the original data file alongside the AI-processed output helps users compare the two and review effectively.

Evaluate the granularity of review required: whether review is needed for selected sections or fields, or for the entire data file. When the user hovers over or clicks a granular piece of processed data, highlight the corresponding section in both views.
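One way to support linked highlighting is to store, for each processed field, the character range it was extracted from in the original file. The sketch below is a minimal illustration under that assumption; `FieldLink`, `source_span_for`, and the sample invoice text are hypothetical names and data, not part of any specific product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldLink:
    """Links one processed field to a character range in the source text."""
    field: str   # hypothetical name of the processed field
    start: int   # start offset in the source text (inclusive)
    end: int     # end offset in the source text (exclusive)


def source_span_for(links: list[FieldLink], field_name: str) -> Optional[tuple[int, int]]:
    """Return the source range to highlight when a processed field is hovered or clicked."""
    for link in links:
        if link.field == field_name:
            return (link.start, link.end)
    return None  # no known source location for this field


# Illustrative data only.
source_text = "Invoice 1042 issued 2024-03-01"
links = [
    FieldLink("invoice_number", 8, 12),
    FieldLink("issue_date", 20, 30),
]

span = source_span_for(links, "invoice_number")
highlighted = source_text[span[0]:span[1]] if span else ""
```

The UI layer would use the returned range to highlight the same region in the original view while the processed field is focused.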

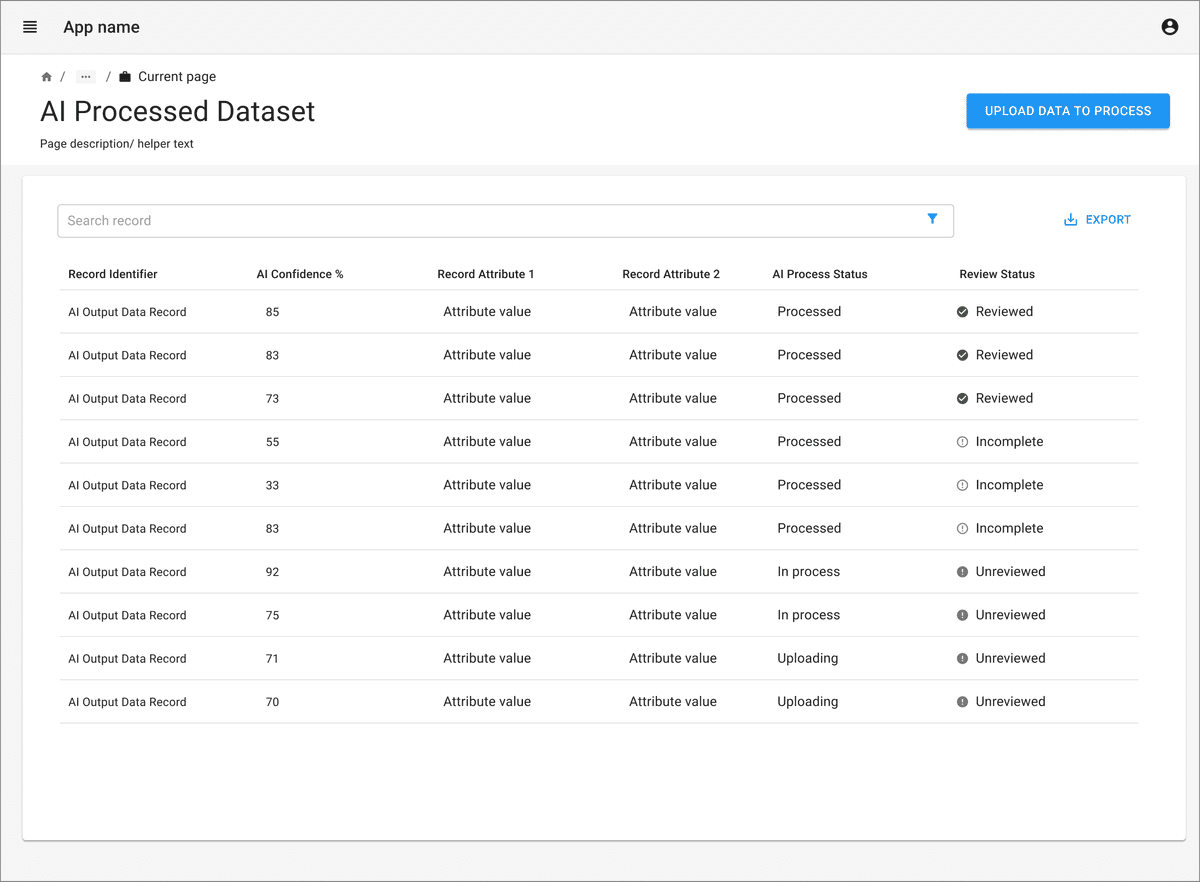

Show the confidence levels reported by the AI process and highlight low-confidence areas so users can scan quickly and prioritize their review.
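As a rough sketch of how low-confidence areas could be selected for highlighting, the function below filters fields under a threshold and orders them so the least certain come first. The 0.8 threshold, the function name, and the sample scores are assumptions for illustration; a real threshold would need tuning for the model and data in question.

```python
def low_confidence_fields(confidences: dict[str, float], threshold: float = 0.8) -> list[tuple[str, float]]:
    """Return (field, score) pairs below the threshold, lowest confidence first."""
    flagged = [(name, score) for name, score in confidences.items() if score < threshold]
    return sorted(flagged, key=lambda item: item[1])


# Illustrative scores only.
scores = {"customer_name": 0.95, "issue_date": 0.62, "total": 0.40}
review_queue = low_confidence_fields(scores)
```

Ordering by score gives reviewers a priority queue: the fields most likely to be wrong are surfaced first.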

Implement automated data validation and correction to enforce consistent field formats and to identify erroneous or high-priority sections for review.
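A minimal sketch of such a validation step, assuming a record with a single date field: known date formats are normalized to ISO 8601, and anything that cannot be parsed is flagged for manual review rather than guessed. The field name `issue_date`, the accepted formats, and the function names are hypothetical.

```python
from datetime import datetime
from typing import Optional

# Assumed input formats; extend to match the data actually being processed.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def normalize_date(raw: str) -> Optional[str]:
    """Return the date in ISO 8601 form, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def validate_record(record: dict[str, str]) -> tuple[dict[str, str], list[str]]:
    """Return a corrected copy of the record and the list of fields needing review."""
    cleaned = dict(record)
    needs_review: list[str] = []
    iso = normalize_date(record.get("issue_date", ""))
    if iso is None:
        needs_review.append("issue_date")  # leave the value untouched, flag it instead
    else:
        cleaned["issue_date"] = iso
    return cleaned, needs_review
```

For example, `validate_record({"issue_date": "01/03/2024"})` yields a record with `issue_date` set to `2024-03-01` and an empty review list, while an unparseable value is returned unchanged and flagged.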

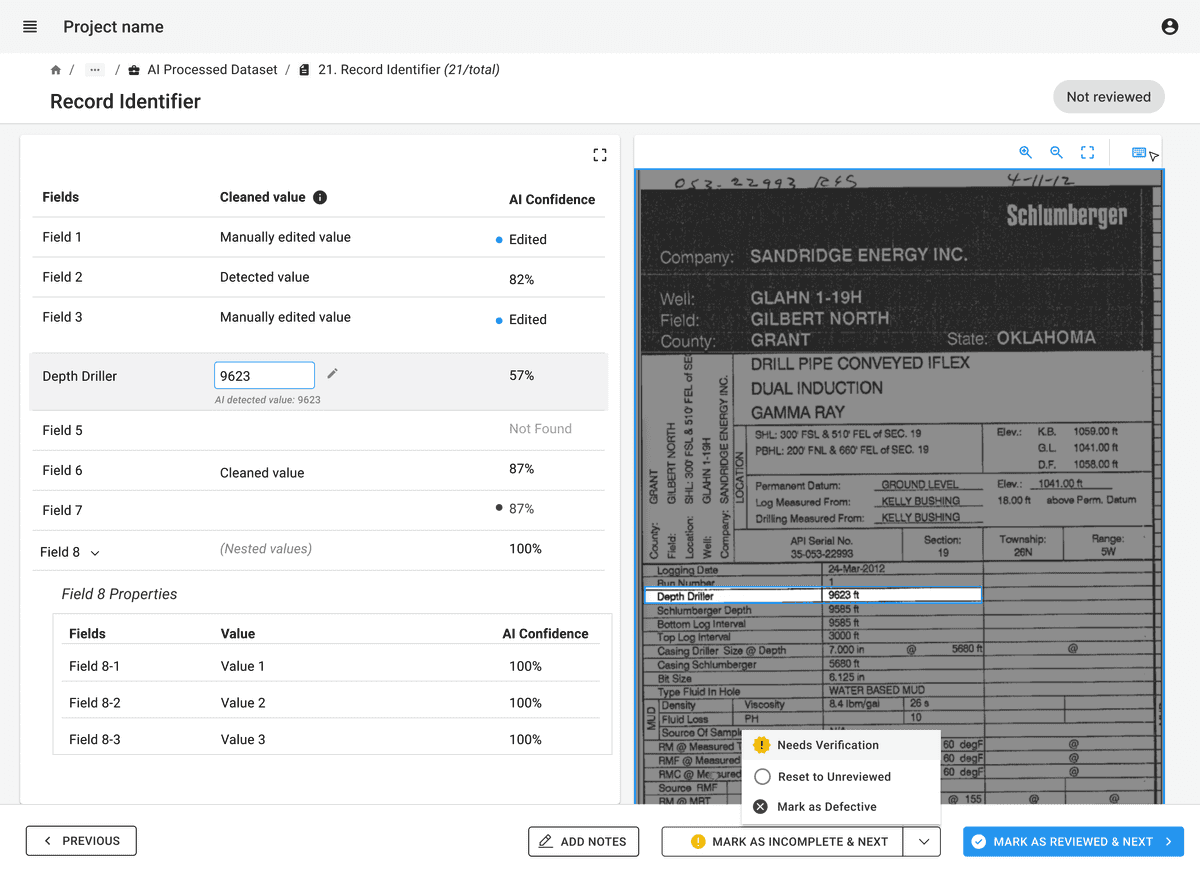

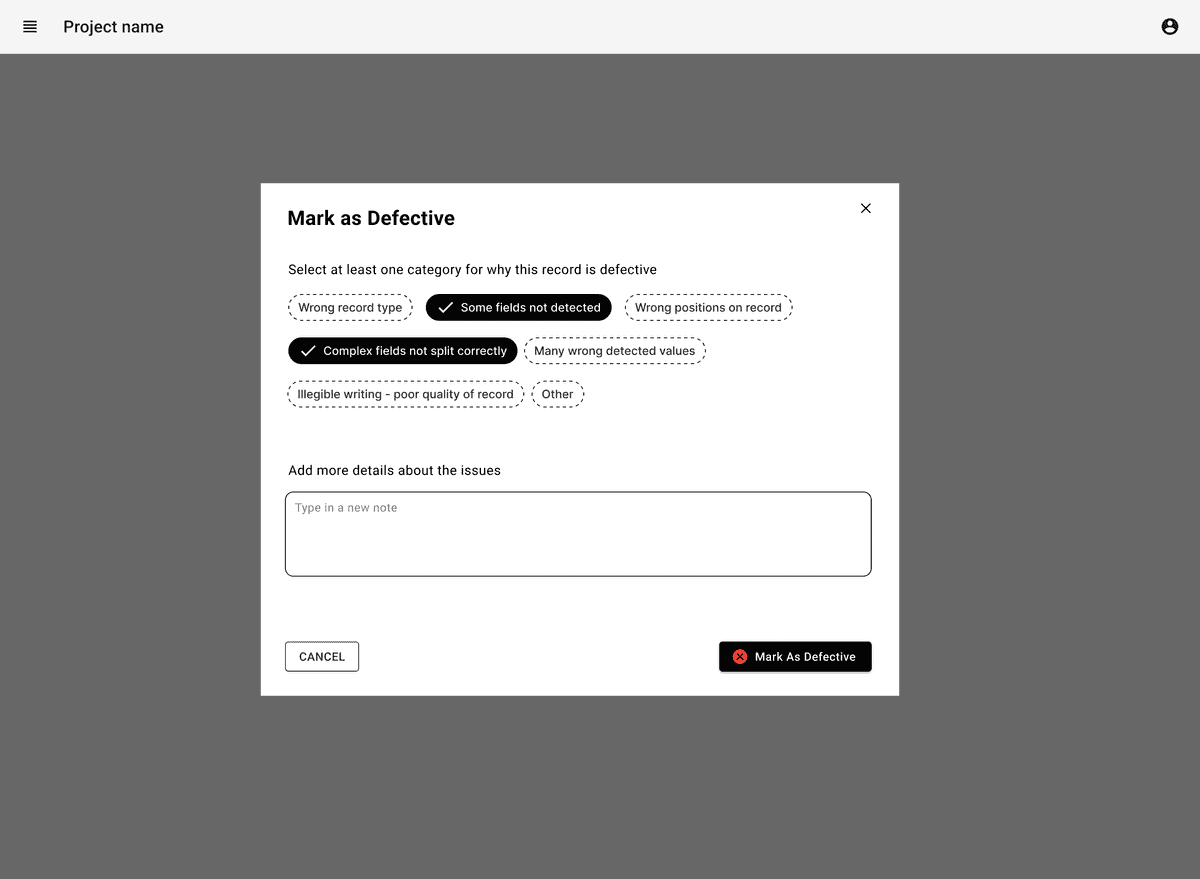

Determine whether the review process requires a secondary reviewer or additional validation steps, and provide actions to flag a data file for a second opinion.
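The flag-for-second-opinion action can be modeled as a small status change on the item under review. The sketch below assumes three review states and a free-text reason; `ReviewStatus`, `ReviewItem`, and `flag_for_second_opinion` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class ReviewStatus(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    NEEDS_SECOND_REVIEW = "needs_second_review"


@dataclass
class ReviewItem:
    file_name: str
    status: ReviewStatus = ReviewStatus.PENDING
    notes: list[str] = field(default_factory=list)


def flag_for_second_opinion(item: ReviewItem, reviewer: str, reason: str) -> ReviewItem:
    """Mark the data file for a secondary reviewer and record who flagged it and why."""
    item.status = ReviewStatus.NEEDS_SECOND_REVIEW
    item.notes.append(f"{reviewer}: {reason}")
    return item


# Illustrative usage.
item = flag_for_second_opinion(ReviewItem("invoice_1042.pdf"), "reviewer_a", "total does not match line items")
```

Recording the reason with the flag gives the second reviewer context without requiring them to redo the full review.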

Consider adding keyboard shortcuts for quick navigation and corrections to speed up the review process for users.
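A shortcut scheme can be kept as a simple key-to-action map that the UI consults on each key press. The bindings below (`j`, `k`, `a`, `e`) are an assumed example, not a recommendation for any particular product; the sketch only handles navigation and leaves the accept and edit actions to the caller.

```python
from typing import Optional

# Hypothetical key bindings for a review screen.
SHORTCUTS = {
    "j": "next_field",
    "k": "previous_field",
    "a": "accept_field",
    "e": "edit_field",
}


def handle_key(key: str, field_index: int, field_count: int) -> tuple[Optional[str], int]:
    """Map a key press to an action and return the (possibly updated) focused field index."""
    action = SHORTCUTS.get(key)
    if action == "next_field":
        field_index = min(field_index + 1, field_count - 1)  # stop at the last field
    elif action == "previous_field":
        field_index = max(field_index - 1, 0)  # stop at the first field
    return action, field_index
```

Keeping the bindings in one map makes them easy to document in the interface and to let users customize later.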

Support export of reviewed data in the required formats (CSV, JSON, XML, etc.) to ease integration with downstream systems such as databases, content management, and analytics.
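As a minimal sketch of the export step, the functions below serialize a list of reviewed records to JSON and CSV using only the Python standard library. The record shape (a flat dictionary of strings, with every record sharing the same keys) and the function names are assumptions for illustration.

```python
import csv
import io
import json


def export_json(records: list[dict[str, str]]) -> str:
    """Serialize reviewed records as a JSON array."""
    return json.dumps(records, indent=2, ensure_ascii=False)


def export_csv(records: list[dict[str, str]]) -> str:
    """Serialize reviewed records as CSV, using the first record's keys as the header."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Illustrative data only.
reviewed = [{"invoice_number": "1042", "issue_date": "2024-03-01"}]
csv_text = export_csv(reviewed)
json_text = export_json(reviewed)
```

Nested or irregular records would need flattening before CSV export; JSON can carry them as they are.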